Can I use a camera with a colour sensor and convert it to a black-and-white image (monochrome) and expect the same result as a camera with a monochrome sensor?

When you take a photo with a digital camera, a lot goes on inside the camera, depending on your settings.

The image format is normally set to JPEG, but you may know that there is also a RAW setting. The RAW setting means that the image is stored essentially as it is acquired by the sensor, with minimal processing.

When shooting in JPEG mode, the camera’s internal software applies colour interpolation, white balance, sharpening, noise reduction, and compression – resulting in image quality loss and reduced flexibility for editing.

So why not just take the RAW image and convert it to black and white? That should give the same quality. After all, the camera is starting from RAW images. Well, the answer is a definitive no, and a comparison between the monochrome and colour versions of a camera sensor will show you why.

Each pixel in the sensor is an individual photo site, often called a well. When photons enter the photo site, they hit a light-sensitive semiconductor diode, or photodiode, and are converted into an electrical current that directly corresponds to the intensity of the light detected. This signal is amplified at the pixel and then sent to an analogue-to-digital converter, which converts it into a digital value and sends it to an image processor.
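The last step of that chain, the analogue-to-digital conversion, can be sketched in a few lines. This is only an illustration: the function name `adc_convert`, the 1.0 V full-scale voltage, and the 12-bit depth are assumptions chosen for the example, not values from any particular camera.

```python
def adc_convert(voltage, full_scale_voltage=1.0, bits=12):
    """Map an amplified pixel voltage to an integer code.

    Hypothetical parameters: a 1.0 V full-scale range and 12 bits are
    assumptions for illustration only.
    """
    # Anything below zero or above full scale is clipped, which is what
    # happens to blown-out highlights.
    clipped = min(max(voltage, 0.0), full_scale_voltage)
    max_code = 2 ** bits - 1  # highest code a converter with this many bits can output
    return round(clipped / full_scale_voltage * max_code)


print(adc_convert(0.0))   # darkest code
print(adc_convert(0.25))  # a mid-grey signal
print(adc_convert(1.5))   # overexposed signal, clipped to the maximum code
```

Note that the converter only ever sees one number per pixel: an intensity, with no colour attached.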

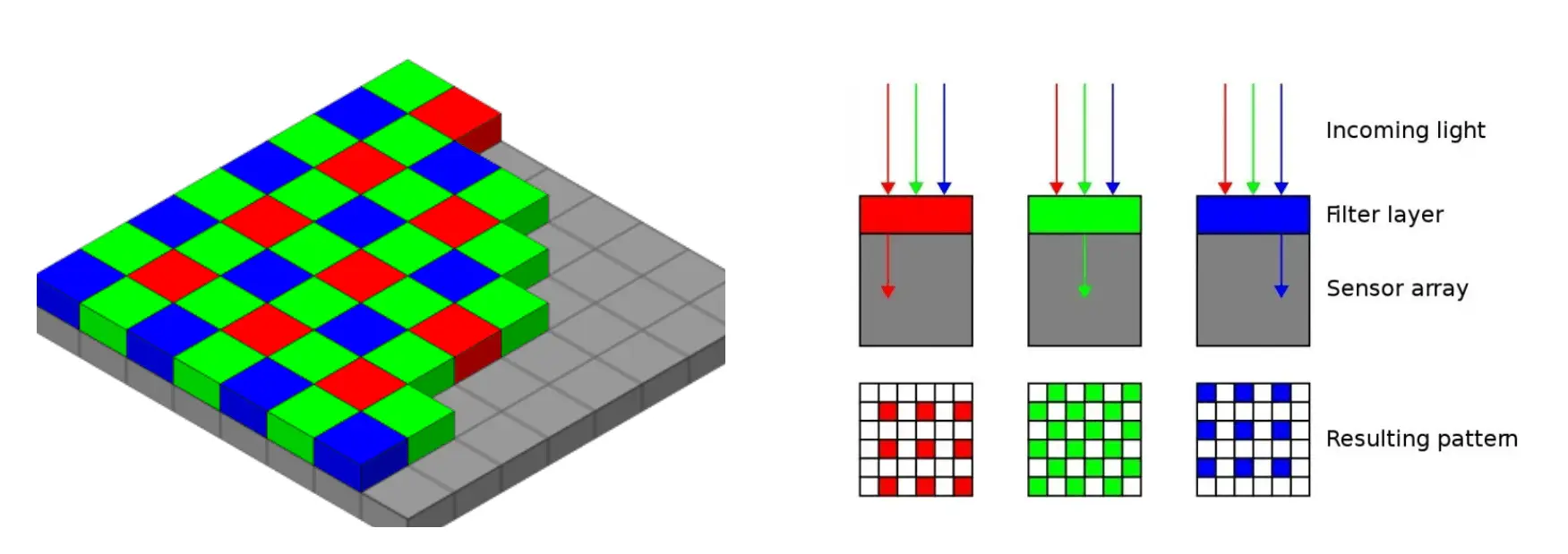

However, pixels detect light, not colour, so a camera sensor by itself can only produce black-and-white images. To create colour images, a colour filter array needs to be added. A colour filter array is a pattern of individual red, green, and blue colour filters arranged in a grid – one for every pixel.

Since green is the colour that our eyes are most sensitive to, each 2×2 block of pixels has two green filters, one blue and one red. This is easiest to see in each corner of the squares above.
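To make the layout concrete, here is a small sketch that prints the filter colour over each pixel. It assumes the common "RGGB" ordering (red in the top-left corner of each 2×2 block); real sensors may start the pattern on a different corner, and the function names are made up for this example.

```python
def bayer_filter_colour(row, col):
    """Return the filter colour over pixel (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"  # even rows: R G R G ...
    return "G" if col % 2 == 0 else "B"      # odd rows:  G B G B ...


def bayer_pattern(height, width):
    """Build the grid of filter colours for a sensor of the given size."""
    return [[bayer_filter_colour(r, c) for c in range(width)] for r in range(height)]


pattern = bayer_pattern(4, 4)
for row in pattern:
    print(" ".join(row))
# R G R G
# G B G B
# R G R G
# G B G B

# Count how many pixels sit under each filter colour.
counts = {colour: sum(row.count(colour) for row in pattern) for colour in "RGB"}
print(counts)  # {'R': 4, 'G': 8, 'B': 4}
```

Half of the pixels sit under a green filter and a quarter each under red and blue, so no single pixel ever measures all three colours.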

A black-and-white camera sensor, also known as a monochrome sensor, works similarly to a colour sensor but with a key difference: it lacks the colour filter array.

The removal of the colour filter array has several advantages:

Increased sharpness:

Without a colour filter array, every pixel records the full light intensity at its own position, so no colour interpolation is needed to fill in missing values from neighbouring pixels. This results in an image with increased sharpness and clarity.
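Part of the sharpness difference comes from the colour interpolation mentioned earlier: on a colour sensor, each colour is measured at only some of the pixels and the rest has to be estimated. The sketch below is a deliberately simplified illustration, assuming one sensor row with alternating red and green filters, ideal filters, a scene lit only by green light, and plain neighbour averaging. Real cameras use far more sophisticated interpolation, so treat the numbers as illustrative only.

```python
def sample_green_row(scene):
    """Keep only the values under green filters (odd columns of an R G R G ... row)."""
    return [value if col % 2 == 1 else None for col, value in enumerate(scene)]


def interpolate_missing(samples):
    """Fill each gap with the mean of its measured left/right neighbours.

    Assumes the row has at least two pixels, so every gap has a neighbour.
    """
    filled = []
    for col, value in enumerate(samples):
        if value is not None:
            filled.append(value)
            continue
        neighbours = [
            samples[i]
            for i in (col - 1, col + 1)
            if 0 <= i < len(samples) and samples[i] is not None
        ]
        filled.append(sum(neighbours) / len(neighbours))
    return filled


# A hard edge in the scene: dark on the left, bright on the right.
scene = [0, 0, 0, 100, 100, 100]

mono = list(scene)  # a monochrome sensor measures every pixel directly
colour_green = interpolate_missing(sample_green_row(scene))

print(mono)          # [0, 0, 0, 100, 100, 100]
print(colour_green)  # [0.0, 0, 50.0, 100, 100.0, 100]
```

The pixel right at the edge comes out as 50 instead of 0, because it was never measured and had to be guessed from its neighbours. That smearing of a hard edge is what shows up as reduced sharpness.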

Improved sensitivity to light:

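Each colour filter blocks a large part of the incoming light and passes only its own band of wavelengths. Without the filters, more light reaches each pixel, so the sensor becomes more sensitive, which can improve performance in low-light conditions.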

Greater tonal range:

Black and white sensors can capture a greater range of tones from black to white, resulting in images with rich gradations of grey.

Chromatic aberration:

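Chromatic aberration, also known as colour fringing or purple fringing, is an optical phenomenon where a lens fails to focus all colours to the same point. This results in colour distortions along the edges of objects in an image. Because a monochrome sensor records no colour information, these coloured fringes cannot appear in the image, although the lens's focusing error itself can still soften edges slightly.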

Conclusion:

The question is whether non-monochrome cameras can match the performance of monochrome cameras in producing black-and-white images. And the answer is no.

Because they lack a colour filter array and are more sensitive to light, monochrome cameras provide sharper, cleaner black-and-white images with better detail and dynamic range.